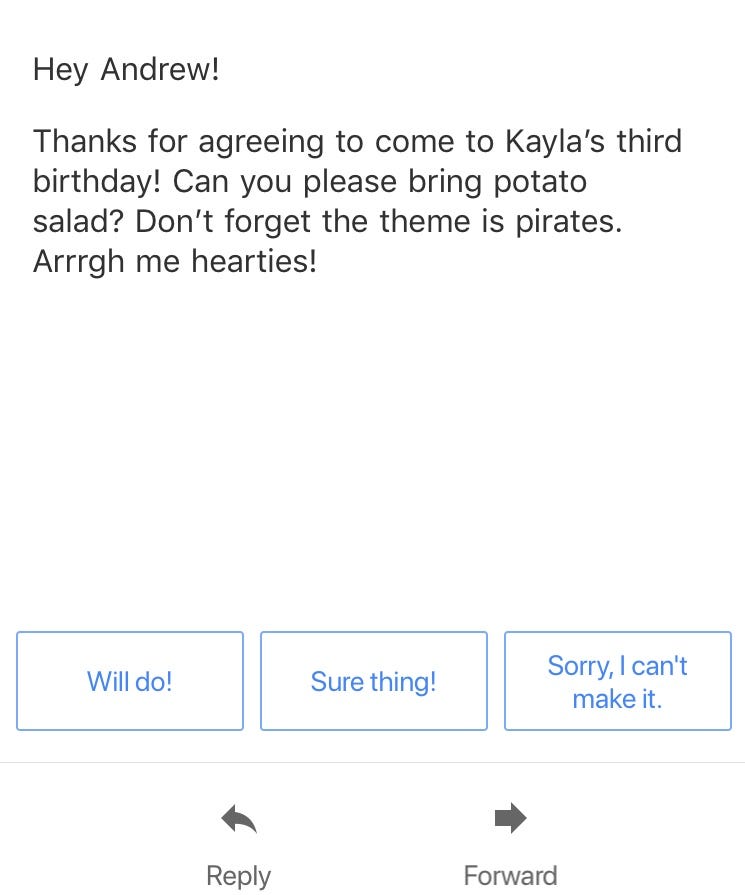

Sometime last year, Gmail introduced Smart Reply. That’s the feature that lets you respond to an email on your phone in one tap. For example, you get an email that reads, “Hey, Andrew! Thanks for agreeing to come to Kayla’s third birthday! Can you please bring potato salad? Don’t forget the theme is pirates. Arrrgh, me hearties!”

If you’re using the Gmail app on your phone, you will be given three automated-response options. I tried this by emailing Kayla’s party request to a second Gmail account. These were my one-touch choices: “Will do!”, “Sure thing!” or “Sorry, I can’t make it.”

Interestingly, someone else receiving the exact same email will be given three different responses to choose from, with nuanced differences in the “voice,” such as a slightly less enthusiastic “I’ll be there,” “I’ll try” and “Let me check.”

This is because Smart Reply builds the answers through an algorithm based on all your previous Gmail correspondence. The app scans your entire email history to attempt a good guess at how you would respond to a potato-salad pirate-party request.

So if somebody had asked me for a potato salad sometime in 2007 and I’d replied, “Shove your potatoes up your arse, Gavin,” that option could have popped up today. Possibly.

The Smart Reply claims, through machine learning, to capture diverse situations, writing styles and tones unique to each user. It even picks up on my weird transatlantic vocabulary, on different occasions offering me both “Awesome!” and “Terrific!”

After tapping your chosen reply, the person receiving your response—maybe Kayla’s mom—has no way of knowing that it wasn’t “you” who wrote the reply. You’re essentially being covertly impersonated by the machine.

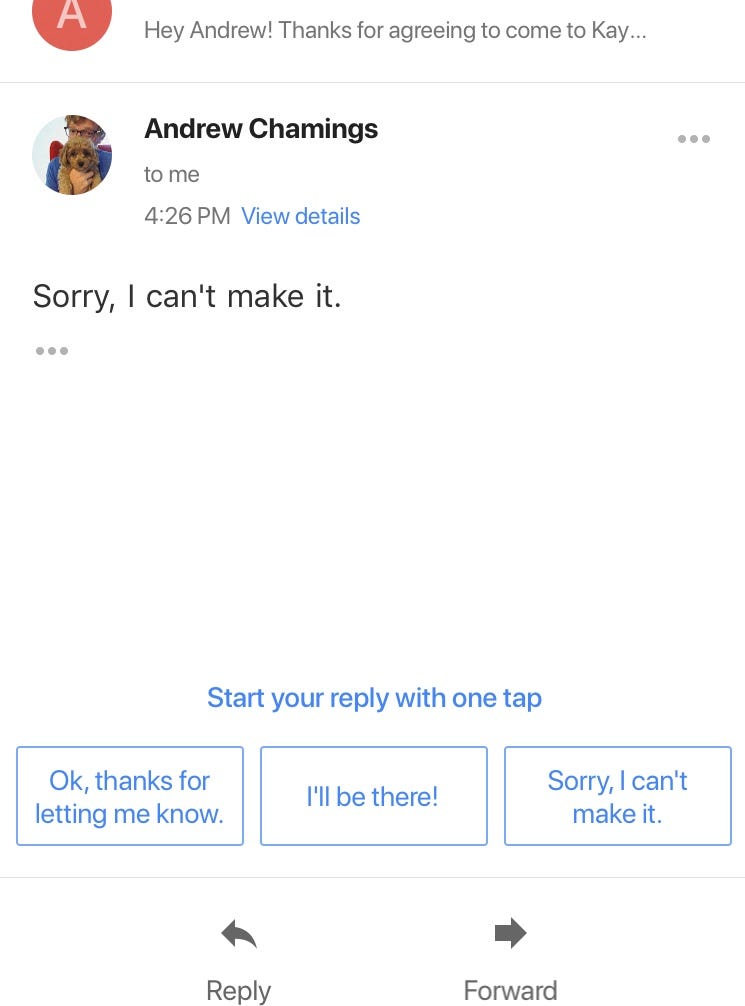

The enthusiasm in the “voice” created for me was pretty accurate—I do use too many exclamation marks. After sending my apologies in one click, I then discovered that a Smart Reply can be responded to with another Smart Reply.

I decided to follow this through with only one-tap responses and see where my two digital Andrews would take the potato chat.

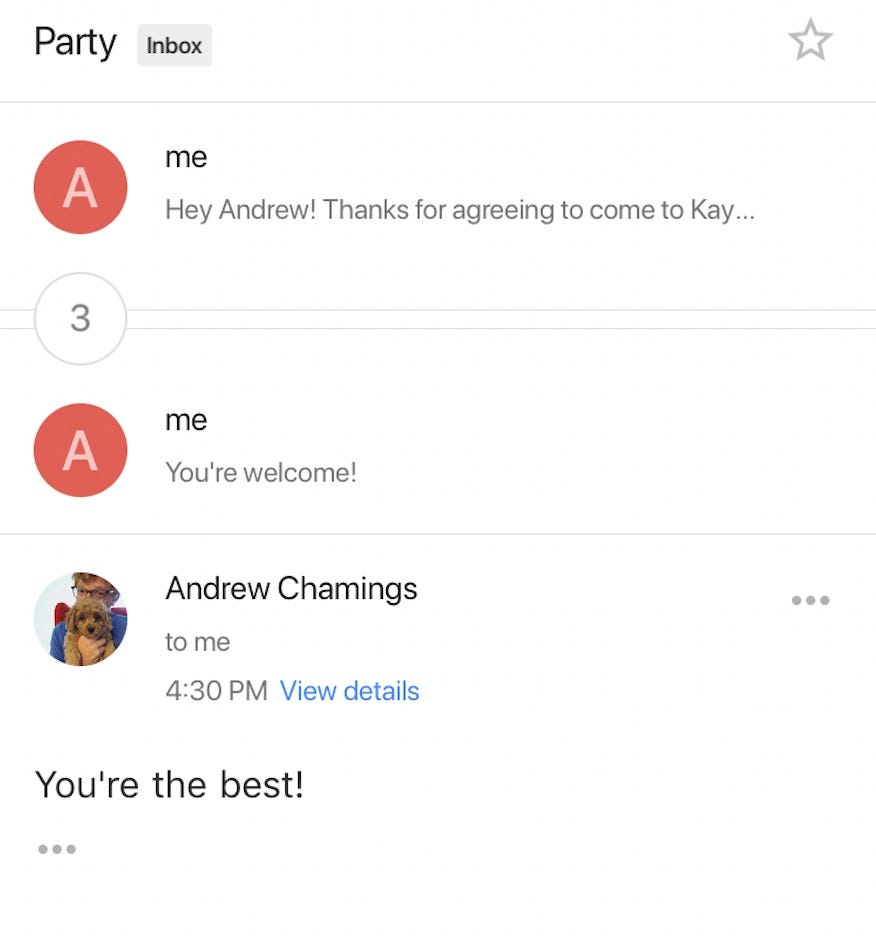

A fatuous exclamation-mark-heavy “Thank you!”, “You’re welcome!” and “You’re the best!” exchange played out. Do I really sound like that?

Ugh, I do, don’t I.

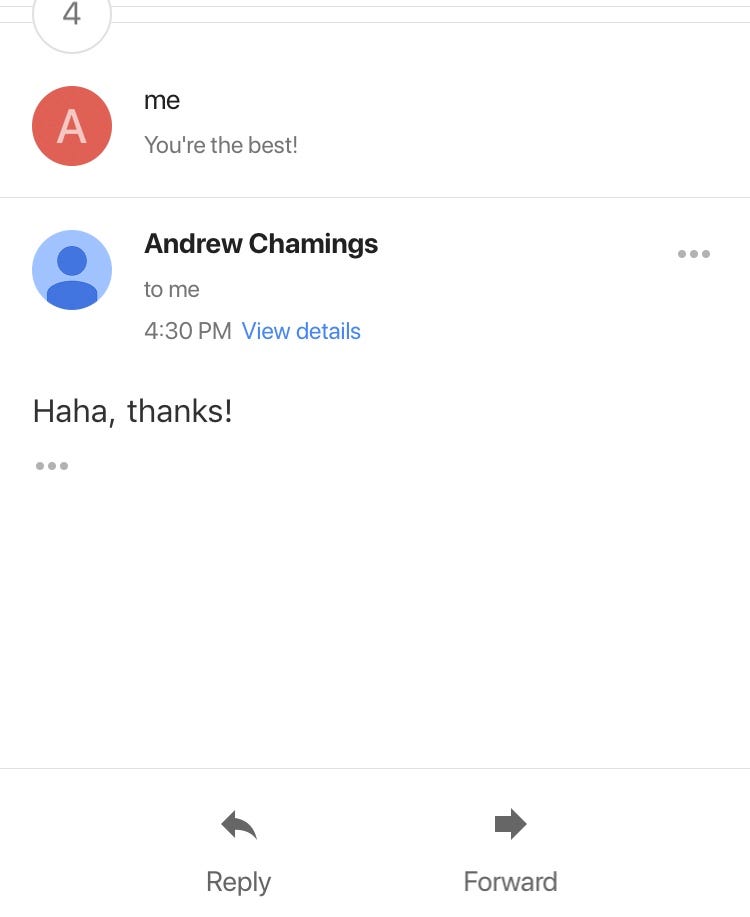

Then something strange happened. My online surrogates seemed to realize that it was all becoming a bit of a joke. A slightly self-referential “haha” appeared in response to the syrupy obsequiousness.

This is exactly what I would have done had this inane politeness carried on for this long between real people. It also knows that I’m a “haha” man: I tend to avoid “LOLs”; I find that “LMFAO” has a certain meanness to it; and I never touch “😂” (the overlap between crying and laughing is way too emotionally complex to sum up in a squirty, grinning yellow ball).

But wait, was that “haha” sarcastic?

It almost feels like an ironic “haha.” Irony is what separates us from the robots! Did they become sentient? Are…are the digital Andrews laughing at me?! I guess it’s important to be able to laugh at yourself.

This is where it ended. There were no possible Smart Replies provided to “Haha, thanks!”

Why had they decided to end it there? Did they know I was onto them? Part of me was sad that it was over. Maybe the digital Andrews had decided to plow back into the depths of my inbox, possibly to answer a work request or tell my neighbor that “I’ll think about” attending his cat’s quinceañera.

Smart Reply is in its infancy and has reportedly been a huge success. Google claims that 12 percent of all emails already use it. It was recently announced that their research team will bring the feature to all chat apps. One development in this new iteration, now simply called “Reply,” is that the AI will use location to inform results. For example, it will build a reply saying that you are “at the restaurant” or “on my way home” by checking your whereabouts.

How does it decide on where to draw the line? What if Reply starts oversharing? “I’m at the McDonald’s drive-thru, gettin’ me nugs,” or “I’m in the boss’s bathroom.”

The replies will likely evolve to be more complex, maybe incorporate more of your “character” and even offer the option of fully automated responses to certain incoming emails. In that scenario, a fake you literally exists autonomously.

How far can this go? If I am asked a question, say, “We may be moving to your neighborhood—are rental rates going up there?” and the Smart Reply knows the answer by mining the endless Google knowledge trove, but “I” don’t, should it use that information within the reply? Or should the Smart Reply present an incorrect response, knowing that it’s what I mistakenly believe?

If I select the correct, informed reply, am I a fraud? Should I reveal that the machine answered for me? Should I write, for example, “Rent is going up fast! But I should tell you, I learned this through Smart Reply”? If I did this enough times, the Smart Reply itself would incorporate the acknowledgment of using the Smart Reply. Aaargh. The Smart Reply would be eating itself.

Will this digital mimicry inform other new technology?

When I own a self-driving car, will it parallel-park like I do? (perfectly)

When Google Home asks Alexa to buy my groceries, will it pronounce “gnocchi” like I do? (“guhnotchee”)

Maybe I should just stop worrying and learn to love the automaton.

Haha.

Who said that?

Follow Andrew on Twitter @andrewchamings